The Post-test Debrief: A new superpower for growth designers

An innovative system for transforming A/B testing into customer-centered strategy

Illustration by Iuliana Ladewig in Adobe Firefly

The Post-test Debrief is a collaborative process that transforms test data into actionable insights. It ensures that A/B tests don’t just become performance numbers on a dashboard but instead help teams connect the key pieces of a broader story, have meaningful conversations about customer behavior, and integrate customer insights into product decision-making. It’s an approach that not only improves efficiency and strategic ideation but also accelerates brainstorming by surfacing key learnings that can shape future experimentation roadmaps.

I'm a staff growth designer on the Adobe Acrobat Growth team, and after five years collaborating across teams and functions, I’ve come to appreciate how much more powerful A/B testing can be when it’s paired with thoughtful collaboration. The Post-test Debrief is one way to turn raw data into shared insight. It can help teams align faster, learn more deeply, and design experiments that aren’t just data-informed but customer-focused.

Why I created the Post-test Debrief

When I joined the Acrobat Growth team, I began conducting user testing to better understand customer pain points when they landed on Acrobat’s pricing pages. While the data offered great insights, it didn’t help triangulate what was happening and why it was happening.

I wanted to understand our customers’ mindsets and whether they had the information they needed to make informed decisions about subscription plans, but it felt like we were testing in a vacuum—it was all raw data. We were generating insights, but because the wrap-up process was minimal, we weren’t connecting them. Once results came in, a summary email would go out, and we’d move on to the next item on the roadmap. There was rarely a moment to pause and reflect.

When it came time to brainstorm and ideate, it felt like trying to find last year’s holiday gift receipts: frustrating, scattered, and ultimately unhelpful. Ideas were pitched without context, and we were often chasing down old results just to understand whether something had already been tested. But with insights scattered across dashboards, Jira, emails, and Slack threads, trying to track down a past test result often required detective-level skills. (“Didn’t we test something similar a few quarters ago? Where are the results of that test?” said every growth team ever.)

The process was time-consuming, frustrating, and inefficient. Imagine writing the same scene in a play over and over—the story would never move forward. More importantly, it was a missed opportunity to use data meaningfully. Without a system to connect past findings to new experiments, we wasted a lot of time. It left me feeling like these ideation sessions could be faster, sharper, and more strategic.

The Post-test Debrief: The missing link between testing and strategy

Growth designers must fully understand the data and the ins and outs of tests so we can make informed design decisions. Too often, tests are labeled “successful” or “not,” with little thought given to what they reveal about user behavior. Here’s the truth: When a test doesn’t “win,” it doesn’t mean it failed; it means you learned something valuable about your customers.

After one A/B test, I gathered the principal manager, Ursula Fritsch, and the data analytics growth manager, Dalton Strayer, and asked if they’d be open to a new approach: a “Post-test Debrief.” The term, which I coined, refers to a 30-minute calendar block after every A/B test in which we do a collective, detailed deep dive into the test and its data so we can start knitting a narrative of what’s happening with our customers.

That first Post-test Debrief, which came on the heels of a successful test, was like a highlight reel of valuable insights that could influence future decisions. Instead of burying insights, it created a structured way to capture learnings, spark new ideas, and set the stage for faster, smarter brainstorming. It was the secret sauce that turned raw test results into actionable strategies. Even though our test was a big winner, we didn’t just look back; we knit a story about what was happening with customers to ensure we’re creating delightful experiences through testing.

Growth teams are often expected to produce year-long roadmaps, and the Post-test Debrief has kept us responsive to real-time insights and evolving customer behavior. Imagine you’re planning next quarter’s roadmap. Would you rather:

- Scramble through old docs and Slack messages to excavate what worked last time, remember what was tested, or decide what to test this time around?

- Open an organized online whiteboard full of past test insights, behavioral patterns, and fresh hypotheses that you can easily jump into and digest because you already noted important information and emerging themes?

Here’s what the second option changed for my team:

- From data points to customer stories. Instead of tracking clicks, we uncover why our customers behave in certain ways. That allows us to start knitting a story about what’s working for our customers and what’s not.

- From siloed insights to shared knowledge. Since everyone has access to test data, single-person knowledge banks cease to exist. The Post-test Debrief gives growth designers a platform to share user testing findings from a UX perspective and opens the door for growth designers to have collaborative, trust-building discussions with product managers and data analysts.

- From slow brainstorming to fast, focused ideation. The Post-test Debrief creates a continuous loop of insights, so teams never start from zero. When it's time to ideate, we open our board with our repository of debriefed tests and pull in all the respective hypotheses, both new ideas and those that build continued customer stories, to test in the upcoming quarter.

How to implement a Post-test Debrief

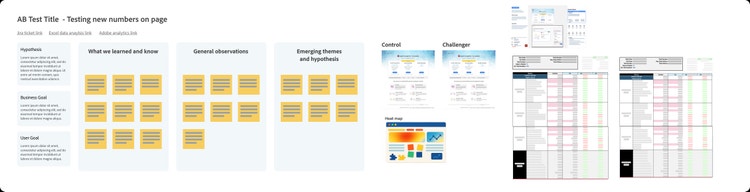

Once test data has matured and stabilized, I add 30–40 minutes to the calendars of Acrobat’s data analyst and product manager. Before we meet, I compile information and data from the Jira ticket and Adobe Analytics, important insights and interesting findings from user testing, as well as hypotheses, business goals, and any other meaningful deep dives.

Who should take part?

If you’re involved in A/B testing, this process is for you. That can mean growth designers, product/growth managers, data analysts and researchers, and almost anyone interested in the process.

Growth designers will get more and better insights into user behavior to design experiences that actually work. The process can help product and growth managers build data-backed strategies instead of taking shots in the dark, while enabling data analysts and researchers to bridge gaps between numbers and actionable insights.

What’s on the agenda?

A structured discussion framework ensures you’re not just reviewing data but making it actionable. A data analytics partner can walk the team through the new information while everyone else takes note of test findings and the sparks of new ideas. The goal should be a collaborative discussion, not just a data readout.

What format to use?

Use an online whiteboard so it’s easily accessible to the entire team, allowing anyone in the group to jump onto the board and jot down comments and important information. By capturing the conversation in a searchable, visual repository, the entire team has a living, breathing knowledge bank of past experiments. Focus content into pillars:

- What we know and learned: The facts, the surprises, and the hard data takeaways

- General observations: Broader trends and unexpected patterns

- Emerging themes and hypotheses: What the test suggests and what should be explored next
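If your team also wants a lightweight, text-searchable companion to the whiteboard, the three pillars map naturally onto a simple record. What follows is only a minimal sketch in Python, not the way my team stores its debriefs: the `Debrief` class, its field names, the `search` helper, and the sample entries are all hypothetical and invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Debrief:
    """One debrief entry, organized by the three pillars (hypothetical structure)."""
    test_name: str
    known_and_learned: list[str] = field(default_factory=list)
    general_observations: list[str] = field(default_factory=list)
    themes_and_hypotheses: list[str] = field(default_factory=list)

    def notes(self) -> list[str]:
        """Return every note from all three pillars as one flat list."""
        return (
            self.known_and_learned
            + self.general_observations
            + self.themes_and_hypotheses
        )


def search(debriefs: list[Debrief], keyword: str) -> list[Debrief]:
    """Return debriefs whose name or notes mention the keyword (case-insensitive)."""
    needle = keyword.lower()
    return [
        d for d in debriefs
        if needle in d.test_name.lower()
        or any(needle in note.lower() for note in d.notes())
    ]


# Placeholder entries; the tests and notes below are made up for the example.
repository = [
    Debrief(
        test_name="Pricing page plan comparison (hypothetical)",
        known_and_learned=["Variant B changed clicks on the plan table"],
        general_observations=["Visitors scrolled past the feature list"],
        themes_and_hypotheses=["Clearer plan differences may reduce hesitation"],
    ),
    Debrief(
        test_name="Checkout button copy (hypothetical)",
        themes_and_hypotheses=["Test shorter copy on mobile"],
    ),
]

# Answers "Didn't we test something similar a few quarters ago?"
matches = search(repository, "plan")
for debrief in matches:
    print(debrief.test_name, "->", debrief.themes_and_hypotheses)
```

Keeping each pillar as its own list means a planning session can pull only the hypotheses forward while the facts and observations stay attached to the test that produced them.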

Ready to try it?

Start small. Pick your next A/B test and schedule a Post-test Debrief. Create a shared space to document key takeaways. Set a recurring cadence so it becomes part of your team’s experimentation process. Encourage cross-functional participation for richer insights. Then watch as your experimentation practice becomes more efficient, insightful, and strategic than ever before.

When A/B testing is human-centered, it drives real results

At the end of the day, great design and product decisions aren’t just about data; they’re about understanding people so we can design delightful products for them. The Post-test Debrief helps teams connect the dots between A/B tests and real user behavior, making every experiment count and driving real results:

- Faster quarterly planning: No more wasting weeks trying to remember past tests. Teams can dive straight into ideation.

- Stronger hypothesis development: New tests, built on validated learnings, lead to smarter future experiments.

- Richer customer understanding: Tests aren’t just about conversion rates; they also help map user behavior and motivations.

- Higher-quality tests: Instead of testing the same ideas over and over, each experiment moves the strategy forward.

- Proactive instead of reactive teams: Instead of scrambling to figure out “what to test next,” teams have a clear roadmap of ideas grounded in real insights.

Let’s stop running tests in isolation and start weaving data into actual stories about our customers.