Ask Adobe Design: How are you using Adobe Firefly?

How our team is using our generative AI model in their personal and professional projects

Illustration created in Adobe Firefly

With generative workflows in Adobe Photoshop (Generative Fill), Adobe Illustrator (Generative Recolor), and Adobe Express (text-to-image and text effects), Firefly has been changing the way our designers brainstorm, create concept art, and complete repetitive tasks. Adobe Design helped shape the Firefly experience, and our team members are also using the technology in a range of projects, both professional and personal. We spoke with a handful of Firefly power users from Adobe Design to hear how they’re using generative AI in their workflows:

“The ability to create unique Photoshop textures (and generate multiple versions) using Firefly seems exponential.”

Lee Brimelow, Software Development Engineer, Design Engineering

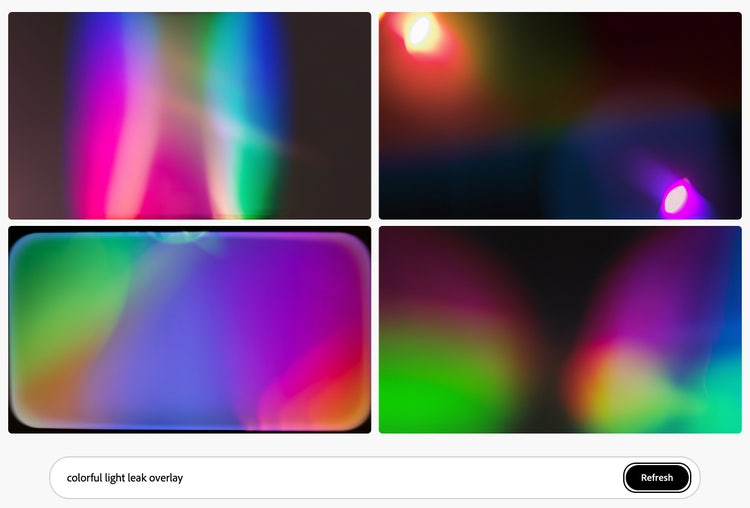

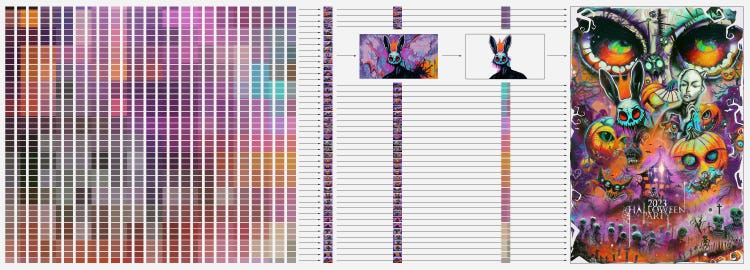

“Since Firefly was released in beta, I’ve spent countless hours creating a wide variety of content that’s ranged from cute and funny images to compelling and beautiful illustrations (creative minds can run wild with it). But I’ve also been trying to create more useful assets that designers and photographers commonly use to augment their work: Photoshop overlay textures, which usually live in a layer above the main content and are overlaid onto it using a blend mode. These textures can be used to adjust a photo’s lighting, to add effects like grunge or grain, and for a whole host of other purposes. In the example below, I used a rainbow light leak texture to recolor an existing photograph.

“Finding just the right overlay texture can be time-consuming, so the ability to generate them in Firefly is great, and the ability to create unique textures and generate multiple versions seems exponential. The work I’ve been doing only scratches the surface of what’s possible, but I still don’t envision that generative AI will ever replace creative professionals; I see it as empowering them to bring their designs and photographs more easily to the level that’s in their mind’s eye.”

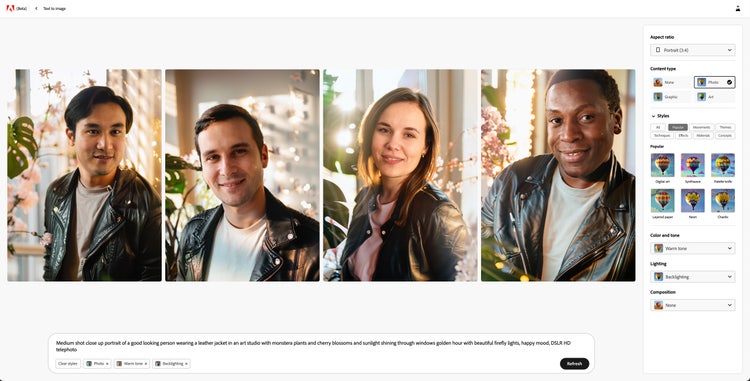

"The ability for Firefly to produce convincing portraiture from scratch is extremely practical, especially when I need unique assets for demos."

Davis Brown, Experience Designer, Digital Imaging

“Working with Firefly to generate photorealistic portraits is of particular interest to me in my creative process. The ability for it to produce convincing, lifelike images from scratch is extremely practical, especially when I need unique assets for demos. The reactions of people when they realize that these convincing images are entirely AI-generated never cease to amaze me. It’s a powerful testament to the advancements in this technology and its potential in the world of art. My process is driven by the ongoing progression of AI, so it’s constantly evolving. Every day I’m learning and exploring new creative territories, and it’s an exhilarating part of my journey as an artist.”

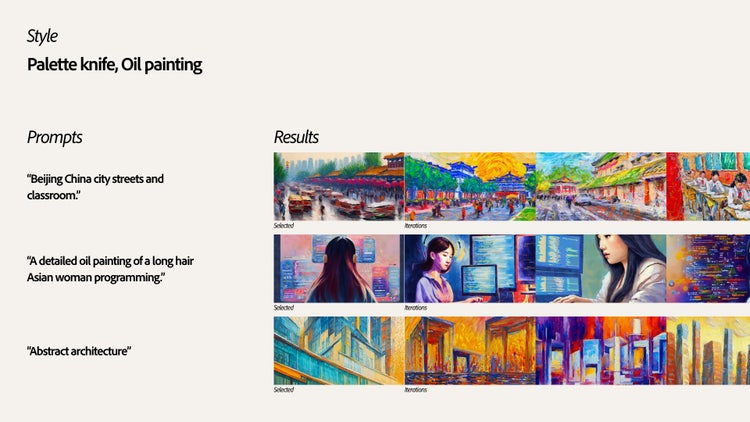

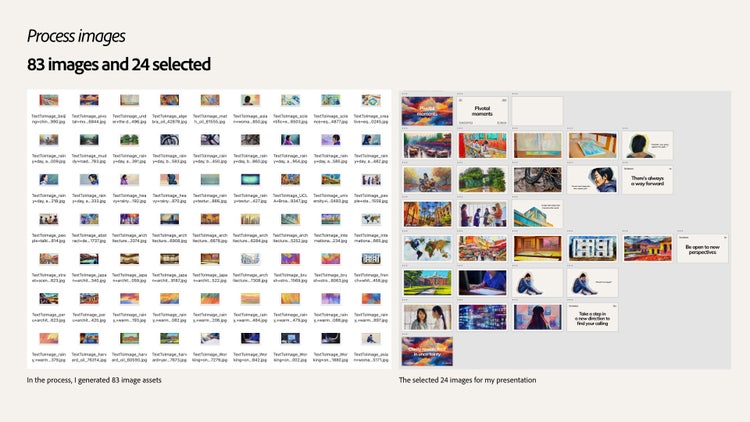

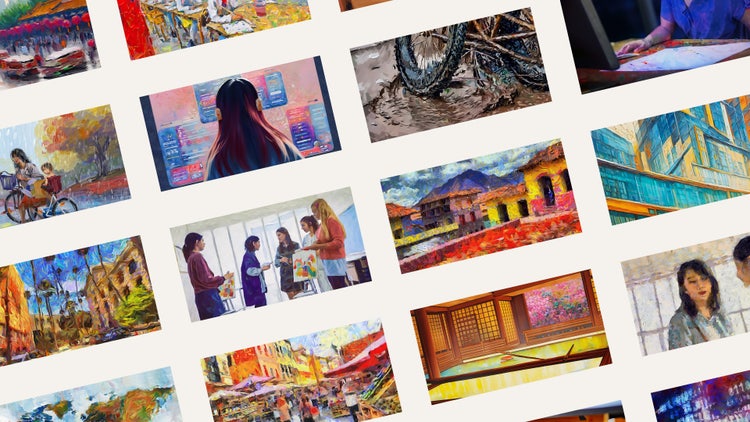

“Firefly has a style engine that’s extremely useful for achieving a consistent aesthetic throughout a collection of images.”

Veronica Peitong Chen, Experience Designer, Machine Intelligence & New Technology

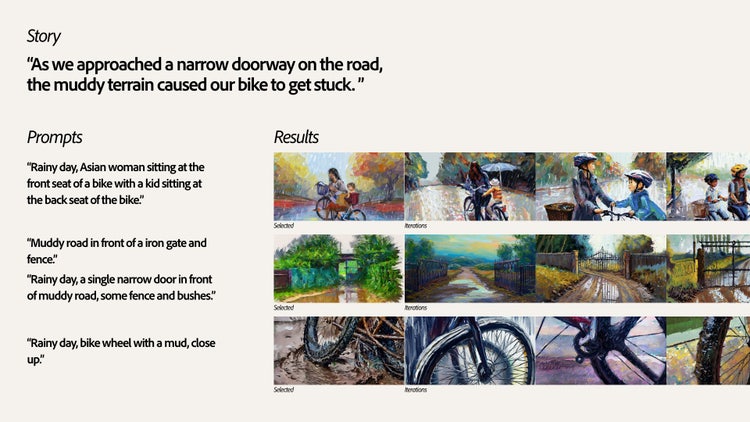

“Imagine you’re asked to paint a portrait of your life story. How would you captivate the audience and make every moment come alive? That was the challenge I faced when preparing for Pivotal Moments, one of Adobe Design’s internal speaker series that gives people an opportunity to share transformative moments from their lives and careers.

“I’d crafted my speech around three pivotal moments that shaped my academic and career journey. As part of the core team building and testing Firefly, I realized that it would be the perfect way to create a visual theme to thread through my story. Firefly empowers storytellers to experiment, refine, and enhance their visuals until they capture the essence of the story they want to tell. With each iteration, I had the opportunity to fine-tune the results, adjust the composition, and experiment with visual elements, a process that ensured the results closely matched my memory while effectively illustrating the story.”

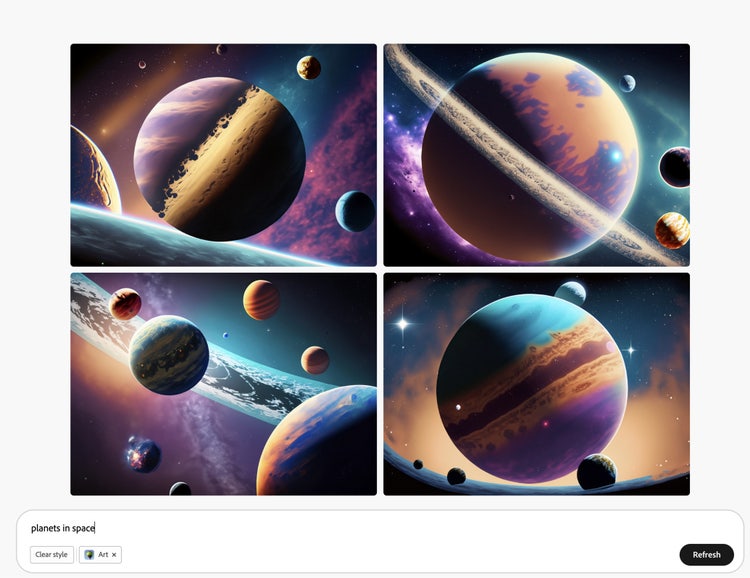

“The ability to create any scene I can dream up has really opened possibilities for the types of stories and analogies I use in internal demos.”

Kelsey Mah Smith, Experience Designer, Machine Intelligence & New Technology

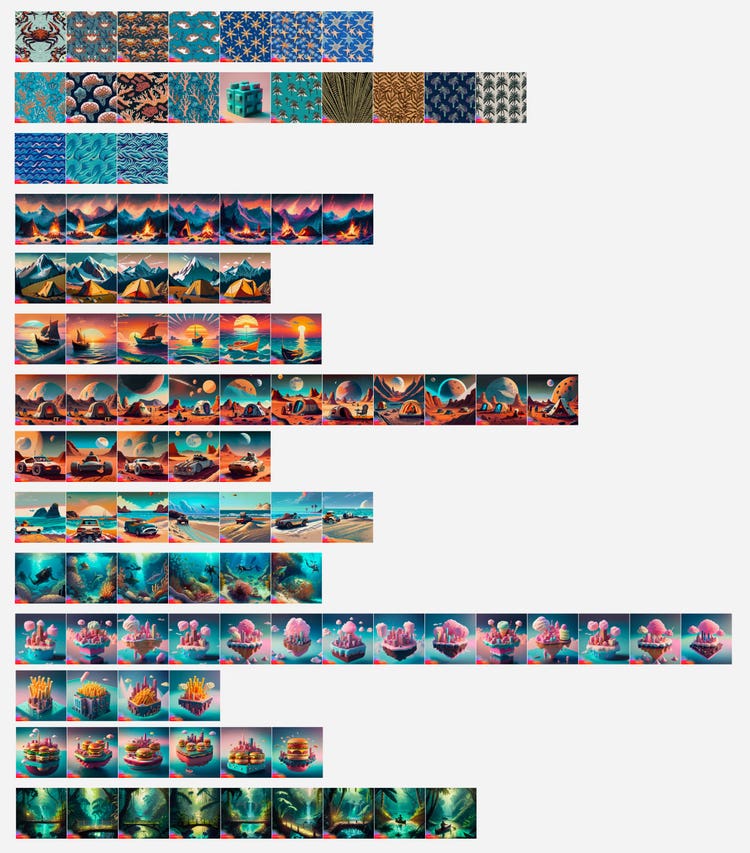

“Part of my job is to shed light on the unknown, to tell stories rooted in research, trends, and an understanding of user needs. I often create decks and designs to share abstract and broad concepts with other teams, which requires visual analogies to set the stage and strengthen my narrative. Standard deck templates felt stale and didn’t do much to support the variety of stories and concepts that I wanted to convey. Whenever I needed to customize a deck, I would search on stock or create illustrations on my own. It was time-consuming to get the right images, textures, and illustrations I needed.

“I’ve been using Firefly for almost all my projects since the beta launched. It’s where I start ideating concepts for what I want my story or visual theme to be. I’ll generate everything from textures and icons to full-on visual scenes to help support my stories. From there I download the assets and bring them directly into a design tool where I’ll collage, mask, and layer them. Having the ability to create any scene I can dream up without having to search across stock sites or public domain images has really opened possibilities for the types of stories and analogies I can use. In addition, the time it takes to produce custom assets has decreased while the variety of assets and themes has increased. Essentially, it’s faster to be even more creative than I was before, which ultimately helps me get back to designing strategies to make our products and features easy for our customers to use.

“For this particular deck I wanted to use a deep space analogy to evoke the vastness and excitement of the unknown in space exploration.”

“I can quickly generate images on a specific theme or concept which not only sparks ideas but helps me explore visuals I hadn’t even considered.”

Tomasz Opasinski, Creative Technologist, Machine Intelligence & New Technology

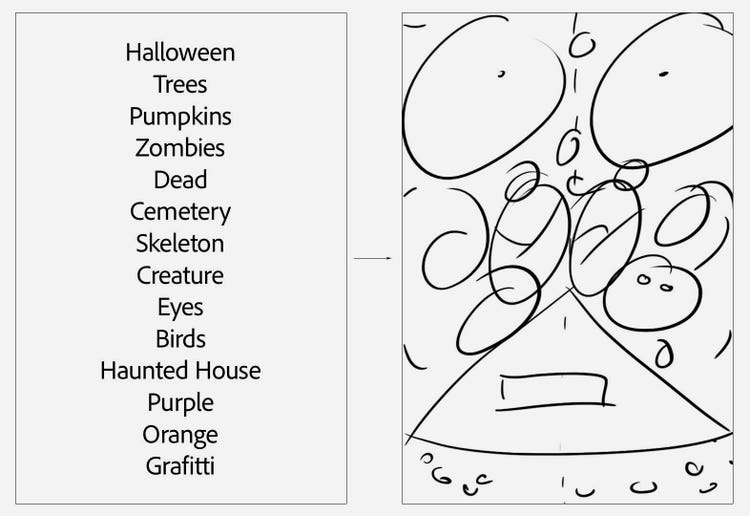

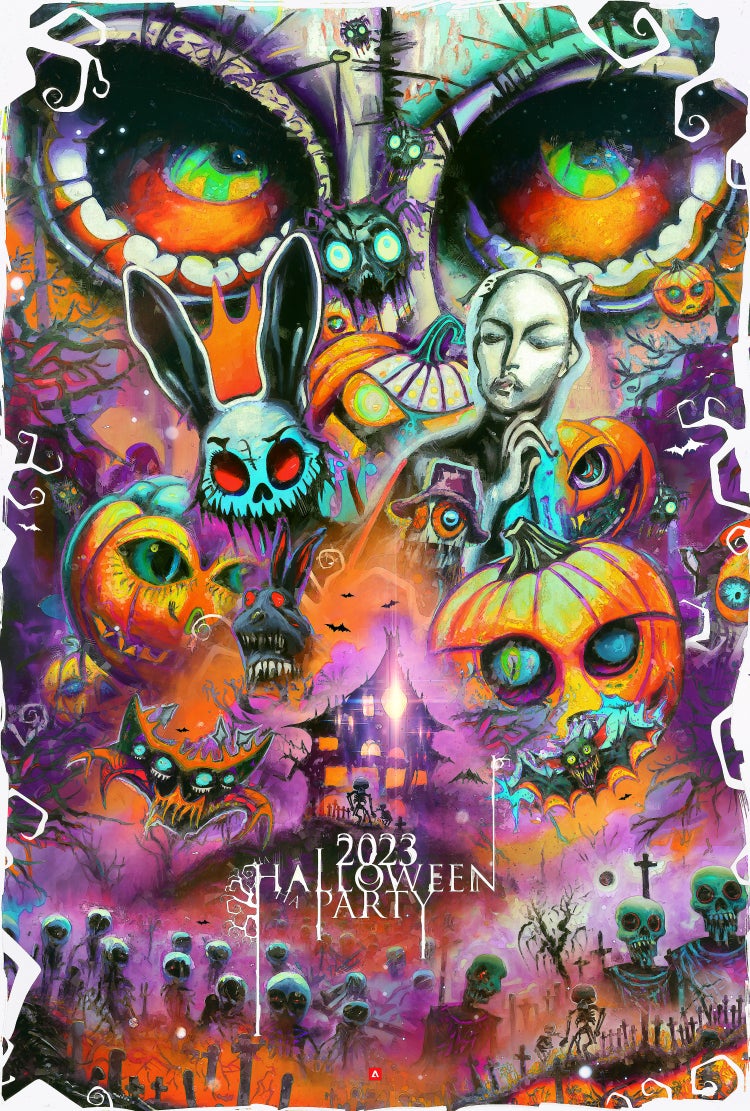

“One of the most exciting possibilities of Firefly is its ability to aid in the ideation process. As a creative (prior to Adobe I was a poster designer and was part of more than 560 theatrical, streaming, TV, and video game campaigns), I always feel a need to be generating fresh ideas, so I experiment a lot. Firefly quickly generates images on a specific theme or concept, which not only sparks ideas but helps me explore visuals I hadn’t even considered. It’s like having a design assistant tirelessly generating concepts and visual references, freeing up my time and mental energy to focus on refining and executing my vision.

“I don’t think I’ll ever see generative AI as a replacement for human creativity and ability; for me it’s a tool for exploring ideas, speeding up workflows, and generating high-quality assets. I recently used Firefly to assist in the creation of a poster for a Halloween party, with themed characters, pumpkins, haunted houses, and appropriately scary background images.”

“Before Firefly, if my content required variations, I would have to manually modify them: each pattern, color, and alteration was a separate task.”

Heather Waroff, Senior Experience Designer, Extensibility

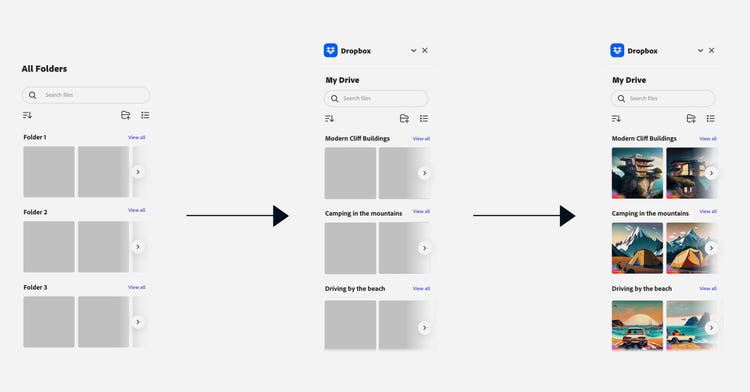

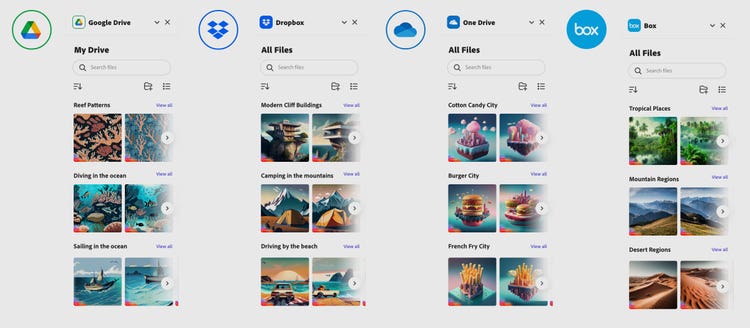

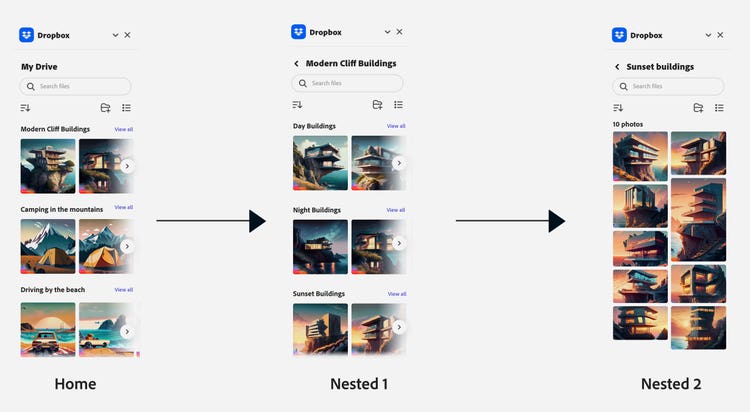

“As a designer on the Extensibility team (where we explore how to add new functionality to our applications without changing core functionality and how to embed Adobe tools and assets into third-party applications), I’m often asked to envision how experience interoperability works across Adobe. Doing this requires telling a workflow story that encompasses a user’s entire journey, from first interaction to accomplishing a goal, when interacting with our products or services.

“By telling a visual story of a user’s end-to-end journey, we can help others visualize what an experience will look like before building it. My workflow for these experience stories includes the creation of supporting content so that wireframes and digital prototypes feel real enough that they capture the essence of what a user will be doing. When figuring out what content is needed, I always ask myself the same set of questions:

- What’s the result/outcome they’re looking to get to?

- How will they shape their content to get to the result?

- What are the steps to getting there?

- What content is the user starting with?

“Prior to Firefly, I was illustrating stories through template manipulation and manual content creation using Express, and my process started with looking for a template that could communicate what a user would be doing. For example, if I was exploring what a small business owner would be creating, I would create a fake company to illustrate that. If the content required variations, I would have to manually modify them—each pattern, color, and alteration was a separate task. Since the beta launch of Firefly, I’ve been using it to generate content to show user journeys and options—and it’s exponentially sped up my work.

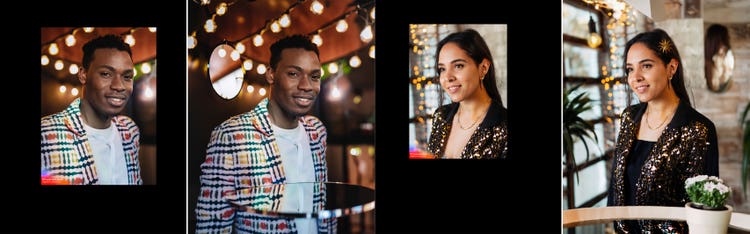

“This example workflow tells the story of how the new Dropbox, Google Drive, and OneDrive add-ons would work in Express: The fictional user is a freelance book cover illustrator using Express to create book covers, and the process shows how they would pull in images from various cloud storage services. It required a variety of images to make the experience feel realistic.”

The Firefly beta is open to everyone. Visit the site and experiment with how to use it in your work.

Ask Adobe Design is a recurring series that shows the range of perspectives and paths across our global design organization. In it we ask the talented, versatile members of our team about their craft and their careers.