Behind the design: Partner models in Adobe Photoshop

Bringing multiple generative AI models into a single experience marks a pivot point for Adobe's flagship application

Illustration by Davis Brown in Firefly Boards (edited using partner models in Adobe Photoshop)

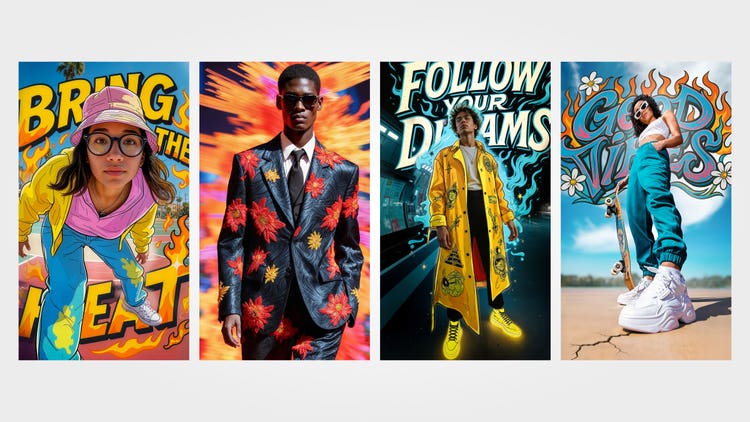

That’s the vision behind partner models. These third-party generative AI tools, which launched in Adobe Firefly earlier this year, have now landed in Photoshop, promising to reshape the creative landscape. Models like Google Gemini 2.5 (Nano Banana), Black Forest Labs FLUX Kontext Pro, and Topaz Labs Gigapixel are now integrated directly into the app, so artists and designers can upscale, refine, and reimagine without ever leaving their artboard.

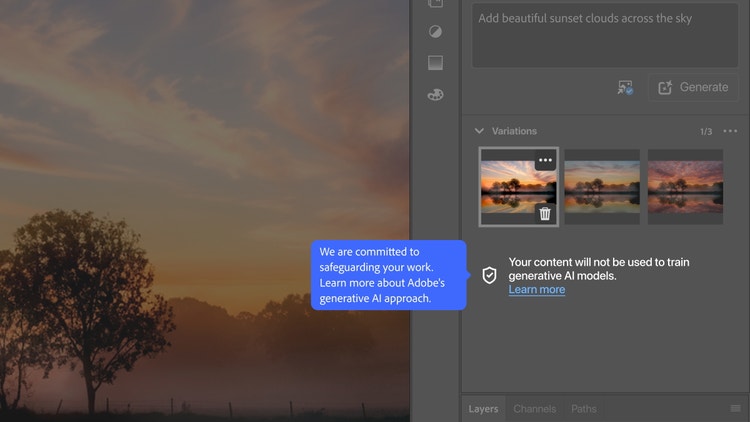

Trained outside of Adobe, these third-party models unlock a wider world of styles and workflows right inside Photoshop. Type in a prompt like “change the sky in this image to a sunset with swirling clouds,” and suddenly you’re able to explore it through multiple capabilities and styles. And the best part: no matter which model output you choose, your work stays yours, always protected and never used to train AI.

When Senior Experience Designer Davis Brown joined Adobe, he set his goals in writing: to inspire creatives through emerging technology while designing tools he loved to use himself. In 2019, he began experimenting with generative AI in his own creative practice. Not long after, he helped conceptualize Generative Fill, Photoshop’s first generative feature.

We spoke with Davis about how the vision for partner models unfolded in early 2024, when a cross-team collaboration led by VP of Design Matthew Richmond, Venture Lead James Ratliff, and Staff Experience Designer Heather Waroff began charting the future of generative AI in Adobe’s professional tools.

What was the primary design goal when you set out to design the partner models experience?

Davis Brown: In 2024, using different generative models required having multiple subscriptions or using open-source tools built for developers. That left only advanced users able to access the latest AI technology. Embodying Adobe’s philosophy of Creativity for All, we set out to create an all-encompassing experience where anyone, regardless of technical expertise, could create anything they imagined.

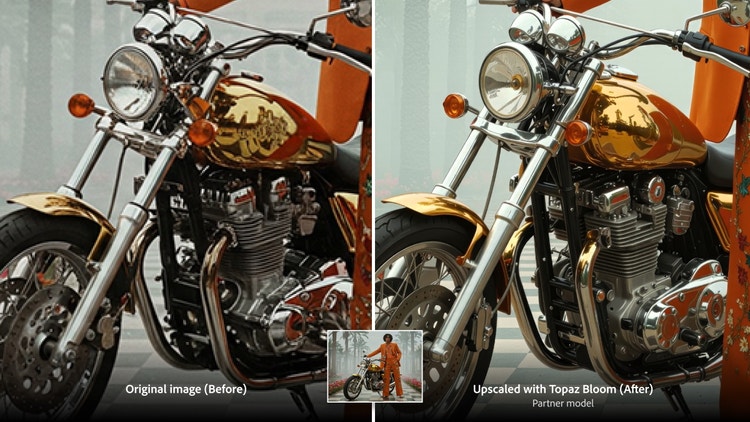

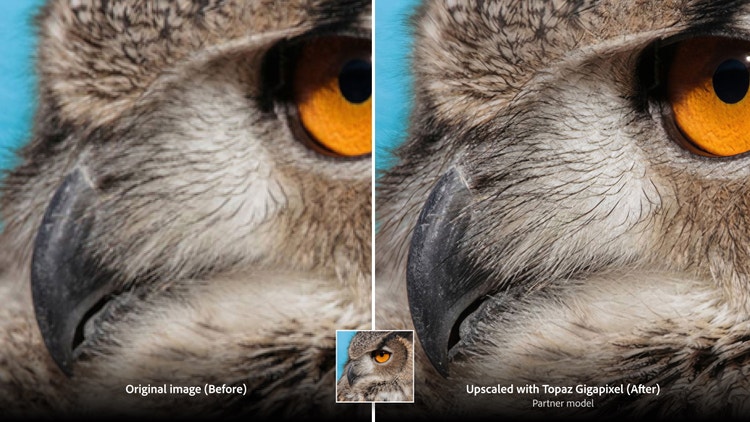

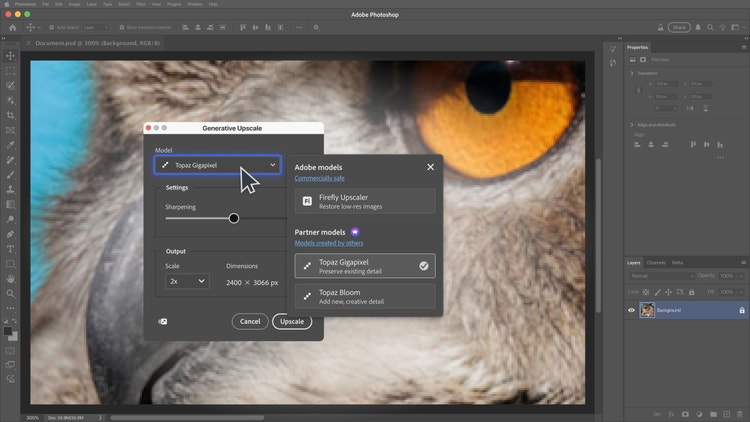

The implementation of partner models into Generative Upscale came from the idea of making upscaling technology easily accessible to all Photoshop users. Like putting on a pair of glasses and finally seeing an image that was previously blurry, upscaling increases image resolution for greater detail, sharper quality, and print-ready results. It pairs well with generated images, which frequently have unexpected artifacts or skin that’s overly smooth and unnatural.

Making AI models easily accessible was also inspired by my own work as a creator, using this technology on nights and weekends. I was upscaling images almost every day and spending over $45 a month on generative models developed outside of Adobe. I knew I’d much rather use this technology inside Photoshop, where I was already working and had precise control. When I shared my work on social media, I realized I wasn’t alone: other artists and designers wanted the same.

What user insights did you leverage to help inform the design solution?

Davis: I'd like to call out Staff Experience Researcher Roxanne Rashedi, Senior Research Manager Laura Herman, Principal Experience Researcher Wilson Chan, Senior Experience Researcher Natalie Au Yeung, and Product Manager Carlene Gonzalez, who gathered insights that shaped Adobe's approach. Some of their impactful findings included:

- Resolution and sharpness gaps: In-app feedback showed “poor resolution” (low resolution and lack of sharpness or focus) as a top pain point in Generative Fill. This insight was crucial for presenting a case to leadership to build Generative Upscale and invest in outside model partnerships.

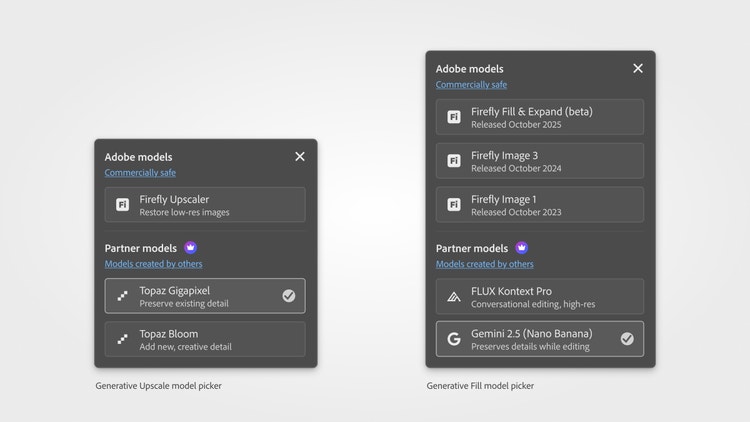

- Cross-model workflows: Many of our customers use other image generation models and then bring that work into Photoshop. We knew we could reduce the friction of moving back and forth between apps by consolidating models in Adobe tools. The idea evolved into the current model picker menu.

- Photoshop-specific needs: Photoshop users emphasized the need for AI-enhanced editing workflows. Rather than generating images from scratch, users wanted to “elevate the details in my image.” To address this, we embedded partner models into editing workflows, like Generative Fill and Generative Upscale.

- Commercial safety transparency: There were concerns that non-Adobe models could generate content unsafe for work or commercial use, reinforcing the importance of Adobe’s focus on safety, reliability, and transparency. We knew we’d have to clearly state in the model picker menu that Firefly is the only model that meets Adobe’s criteria for commercial safety.

- Data training transparency: Customers ranked clarity around data training just below image quality—it underscored Adobe’s commitment to not train on customer work and the importance of making that clear. Whenever someone provides feedback, uses a partner model, or creates a generative layer, they see a clear message—accompanied by a shield icon—that their content will never be used to train AI models. That commitment isn’t just Adobe’s; our partnership agreements also require that external model providers never use customer work for training generative AI.

What was the most unique aspect of the design process?

Davis: Collaborating directly with external partner companies to shape how their models would live inside Adobe apps was the most unique aspect of the design process. Presenting to the CEOs of model providers I admire was a true highlight.

Those conversations, often reserved for product management, truly gave design a seat at the table. They also created an opportunity to share Adobe’s vision for the future of generative AI and how model partnerships could help us shape it. For example, during our research for Generative Upscale, we discovered that text prompts for detail settings could be confusing. Since Photoshop users need maximum identity preservation (upscaling a family photo should sharpen details without altering facial features), we shared those requirements with Topaz so the model settings could be adapted for clarity and control.

What was the biggest design hurdle with this project?

Davis: Partner models wasn’t just another feature launch; it marked a pivotal shift for Adobe. Bringing other models into our applications means redefining how we talk about technology, privacy, and data training. Securing leadership buy-in was essential for such a major change, and one of our biggest hurdles was communicating our vision through design-led presentations.

The project required stretches of late nights and weekend work to keep the feature moving forward. Product, engineering, and design teams were all fighting the same battle, weighing trade-offs in resolution, speed, cost, and quality to determine the best possible experience for our users.

One of the biggest design hurdles was making it clear to people when they were using an Adobe model or a partner model (a premium feature). To address this, we clearly divided Adobe and partner models into two separate categories in the model picker, and we show the active model in the contextual task bar, properties panel, and progress bar. We also stored the model information in the generative layer. We couldn’t have overcome the model picker and premium experience design hurdles without the work of Staff Designers Dana Jefferson and Daniela Caicedo, Senior Design Manager Michael Cragg, Senior Staff Designer Kelly Hurlburt, and many others.

How did the solution improve the experience?

Davis: Bringing partner models to Adobe wasn't just a one-time solution. Incorporating third-party models in our applications would open countless new workflows that were not previously possible, and our designs had to address those.

What did you learn from the design process?

Davis: This project had plenty of pivots and challenges. But three things stand out from the experience:

- Real change takes cross-team alignment and the right timing. Large-scale change in a big company can't always come from a small team sprinting to be first. It takes alignment across research, product, engineering, business, and marketing. Timing matters, too. An idea can be right and still be tackled too early. Teams need to know when not to force things—and conserve energy for when a moment lands.

- This project reinforced design’s role in helping leadership, product management, and marketing better understand what improves the user experience. When there is pressure to move fast, it’s important to pause, ask why, and speak up when something seems off. Having an early voice at the table can prevent bigger problems from cropping up and keep everyone moving in the right direction.

- I saw the importance of partnering with PMs early to align on business models and cost. AI features can be expensive, and if you find out too late that something isn’t financially sustainable, months of work can disappear overnight. Pricing and operating costs must be confirmed up front to avoid derailment later.

What’s next?

Davis: Photoshop has been part of my life since elementary school. I learned how to use it by watching my dad, Russell Brown, demo the tools he helped create. Meeting the original team and now working alongside many of them is a true honor.

As a designer and artist, I know people create their best work when they can get lost in the details, fall into a flow, and have fun while they work. Play sparks invention, inspiration, and imagination, and should always be at the heart of any creative process. The future will be about giving people more control to direct their vision, while still allowing for precision, depth, and the freedom to go beyond a simple prompt. As generative AI evolves—expanding into video, agent-driven workflows, and node-based systems—I’ll keep exploring, making art, and finding new ways to inspire others.

I hope a new generation discovers Photoshop just as I did, and that it stays both the industry standard and the technology-driven tool creatives continue to reach for.