Designing for generative AI experiences

Shaping interfaces for artificial intelligence requires leaning into specific design skills

Illustration by Karan Singh

I'm now a senior experience designer on Adobe Design’s Machine Intelligence New Technology (MINT) team. Alongside the quantum leap in generative AI, there’s been a paradigm shift in user expectations for the digital products that incorporate it: people want seamless experiences, personalized recommendations, and adaptive systems that cater to their unique needs and understanding.

Those new expectations are creating an expanding need, and a compelling opportunity, for UX designers to evolve their practice beyond designing more static interfaces and to begin crafting more natural, intuitive, personalized, human-centered experiences. The shift won’t require developing new or different skill sets, but it will require leaning into the skills that are most useful.

The role of design in artificial intelligence

AI models are great at detection and pattern recognition (like spotting faces in a crowd). They’re also great at classification (neatly organizing a jumble of data), prediction (using historical data to forecast outcomes), and recommendation (learning from previous selections and preferences). They can also synthesize and generate text, images, video, and audio by weaving fragments of information into new creations.

But even with its impressive capabilities, AI walks a fine line between predictability and unpredictability. While it can reliably recognize patterns and classify data, it often misses nuance (such as the details of human anatomy). Because models can only make sense of what they’ve seen before, their ability to grasp the idiosyncrasies of new data is limited. Finally, and most importantly, AI algorithms may not accurately capture or portray human emotion, cultural context, or the depth of personal experience.

When designing interfaces that incorporate AI, designers must never overlook the needs of the people behind each touch and interaction. The foundational goal should be a reciprocal relationship between the technology and the people using it, one that evolves in tandem with each technological leap. When users, design, and technology are in balance, they form a cyclical ecosystem in which each enhances the others:

- Users, integral to the design decision-making process, provide insights and feedback that inform the development of AI systems.

- Designers leverage user feedback to enhance the value proposition of AI solutions, ensuring that they meet user needs.

- As AI technology evolves, designers adapt user experiences with enriched interaction and more personalized and contextually relevant solutions.

Key responsibilities of AI designers

Designing experiences is as important as designing the underlying algorithms, infrastructure, and data of the models. Designers not only have the power to shape the future of AI, they also have the responsibility to ensure that the technology isn’t just intelligent but also approachable, useful, and aligned with human values. They can do that by facilitating agency and control, personalizing digital experiences, and building understanding and trust.

Facilitating agency and control

When designing for AI, designers must consider how to amplify human agency and make room for people’s choices and decisions. Designing the text-to-image experience in Adobe Firefly began simply: generate images by typing in a prompt. However, when we put ourselves in users’ shoes, we realized how intimidating an empty prompt field can be. Crafting the right input may require professional knowledge of multiple creative fields and art movements.

To help people understand and adopt the new task of prompt writing, the prompt bar on the Firefly homepage uses a familiar, search-like interface where users can enter a prompt much as they would start a web search. Unlike a typical search bar, though, it is automatically filled with a sample prompt describing the homepage’s background image, which shows first-time visitors what a prompt can look like.

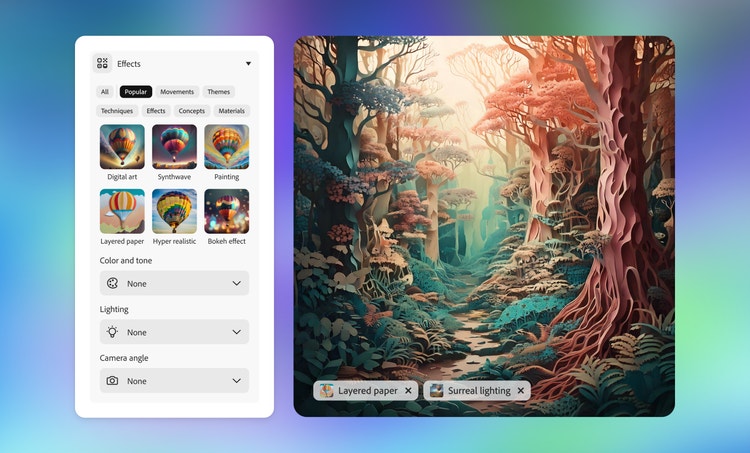

Additionally, to reduce the creative blocks that can arise during prompt writing, and to give people more agency over generated images, we created a panel with controls, presets, and parameters that people can use to refine prompts and generated results. Displaying style options, with corresponding categories and thumbnails, fosters a sense of ownership, builds a deeper connection between the user and AI, and encourages people of all skill levels to experiment.

Personalizing and contextualizing experiences

In today’s digital landscape, designers must understand that users want tailored, contextualized experiences (those that are maximally relevant to their individual needs). In UX design, contextual understanding means recognizing a user’s environment, needs, and situation, and adapting the experience to fit them.

Contextual understanding is at the heart of delivering personalized experiences, even those deeply embedded in workflows. The contextual task bar in Adobe Photoshop, for example, surfaces relevant information, actions, and features at specific points in a user’s journey. AI features like Generative Fill go a step further: they interpret both the image and the user’s input in context to update a section of the image in a consistent style. (Behind the scenes, the AI predicts and fills in missing information based on contextual cues, minimizing the need for manual input.)

Multimodality (the ability to interpret multiple types of input, like text, voice, or images) and dynamic interaction (continuous, responsive interaction that adapts to user input in real time) create rich, flexible, personalized, and contextual experiences. In Project Neo, users can easily create and modify shapes through multiple modes of interaction (for example, working with 3D shapes or from multiple camera angles). This multifaceted approach caters to diverse preferences and skill levels by letting people engage with content in personalized, intuitive ways.

Building understanding and trust

A large part of designing for AI experiences is building empathy between the user and the model to mitigate potential risk. A big step toward that is improving explainability or interpretability (providing a clear rationale for a model’s decisions and outcomes) so people can make sense of what’s happening and why.

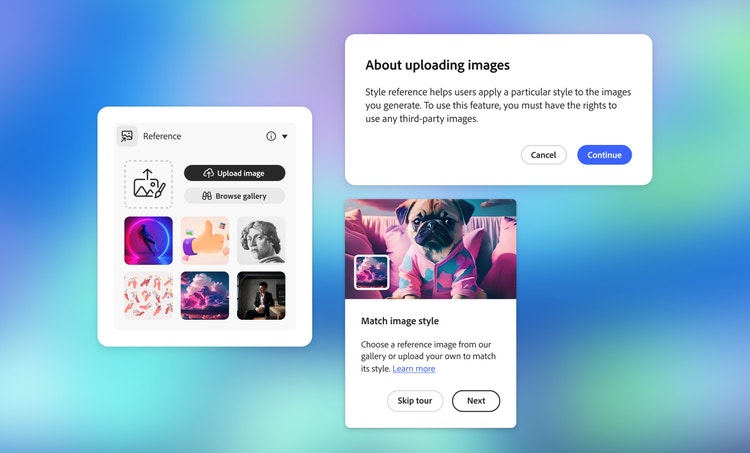

To improve the explainability of Firefly’s Style reference feature, we introduced several design elements that inform people about how AI can enhance the experience. An onboarding tour gives a quick look at the feature and at how data is stored. Later in the upload flow, a pop-up modal states, “You should own this uploaded image,” to help people understand the guidelines for uploading reference images.

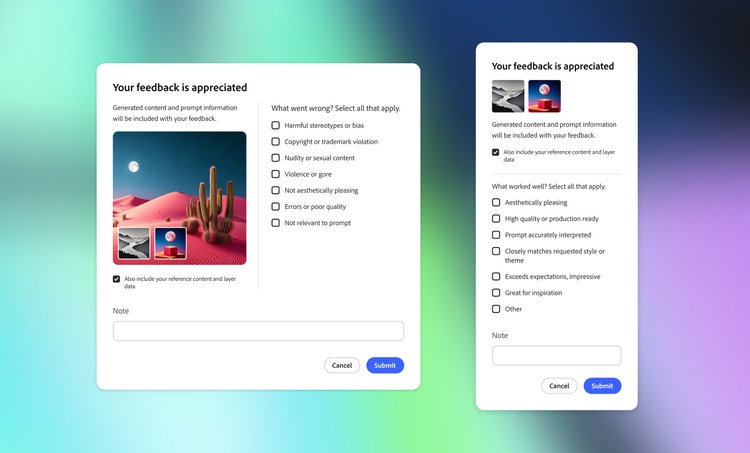

Design can also make experiences more participatory by explaining AI’s abilities and potential issues in plain language instead of jargon. When designers build clear feedback systems, insert them at the right moments in a user journey, and align them with people’s mental models of AI’s abilities and limitations, users are less likely to be caught off guard by incorrect or unexpected outputs. And by anticipating and learning from error conditions, designers can build trust and confidence in AI systems. In Firefly, we’ve made it easy for people to provide feedback and report issues. That explicit feedback lets designers continually refine model quality and the overall experience, and it builds trust by letting users make decisions about the data they provide.

The soft skills UX designers need to design for AI

The practice of experience design is built on understanding our users. Empathy, a core UX skill, is undeniably valuable in designing for AI, but other qualities also make people particularly well suited to this type of design work:

- Thriving in the unknown. AI is a rapidly evolving landscape, and technological research constantly pushes the threshold of innovation. In this environment, projects may not be predictable or clear. Designing for AI requires a level of comfort in navigating gray areas, exploring multiple paths, and iterating as you learn. That sort of openness to ambiguity, coupled with the ability to leverage user insights, can help designers craft experiences that feel intuitive even within the unknowns of AI.

- Innate curiosity. Staying curious about new technologies and about the intersection of technology and creativity is an integral part of my daily routine. I actively seek opportunities to experiment with AI-driven tools, attend workshops and conferences to stay current, engage with communities of like-minded innovators to share ideas and perspectives, and, as much as possible, try out new machine learning models firsthand.

- Adaptability. Five short years ago, image generation technology could only yield blurry, abstract results. Today there’s far more control over generative outputs. Designing for AI requires the ability to pivot quickly to integrate new data, models, controls, and user feedback. It also requires close collaboration with other stakeholders such as researchers, product managers, and engineers. Adaptability enables fluid responses to changing AI capabilities and evolving user needs.

- Innovating beyond current boundaries. One of the most exciting aspects of designing for AI is working on cutting-edge, emerging technologies, often with tools and possibilities that haven’t been fully explored. People interested in this field of design must be comfortable thinking ahead, anticipating what’s next, and looking for ways to use technology to create new experiences that may go beyond current user expectations.

- A systems mindset. AI designers cannot forget about the larger ecosystem they’re designing for. Thinking holistically allows us to consider how different components interact with and affect each other, as well as how each design decision could affect the rest of the ecosystem. Systems thinking helps create coherent, reliable, and ethical AI experiences that feel seamless to users.

Ways to expand an experience design practice

AI is maturing quickly, and with that growth comes increasing demand for skilled designers in a field that didn’t exist three years ago. UX design skills can serve as a base, but it’s helpful for designers new to the field to understand fundamental AI concepts as well as a bit about what’s under the hood. Fortunately, there are multiple avenues for learning, collaboration, and innovation, as well as steps designers can take to begin transitioning into this specialty:

- Increase your data literacy. Familiarize yourself with the fundamentals of data science and machine learning. Understand key concepts like data preprocessing, feature engineering, and model evaluation. This will enable you to have more informed discussions with data scientists and engineers, fostering effective collaboration.

- Experiment with AI tools and platforms. If you’re extra adventurous and want to get hands-on experience with AI, explore online platforms that offer courses and certifications in AI and machine learning. There are also multiple platforms that provide resources and tutorials for designing and implementing AI models. By working on practical projects, you'll gain valuable insights and improve your technical skills.

- Engage with AI-focused communities. Connect (online and in-person) with designers, engineers, and industry experts to learn and discuss the latest developments and applications of artificial intelligence.

- Collaborate. Work closely with engineers, prototypers, user researchers, data scientists, and other stakeholders throughout the AI development process. Engage in cross-disciplinary discussions to bridge the gap between design and technical implementation.

As AI is incorporated into more digital products, it will create a compelling need for designers who understand the nuances of designing for those experiences. Experience designers are already accustomed to adapting their processes and approaches as technology advances; crafting natural, intuitive, personalized, human-centered experiences for AI will simply require leaning into and sharpening skills they already have.