Behind the design: Adobe Content Authenticity app

How we built a new tool for creators to authenticate their work and signal an AI training preference

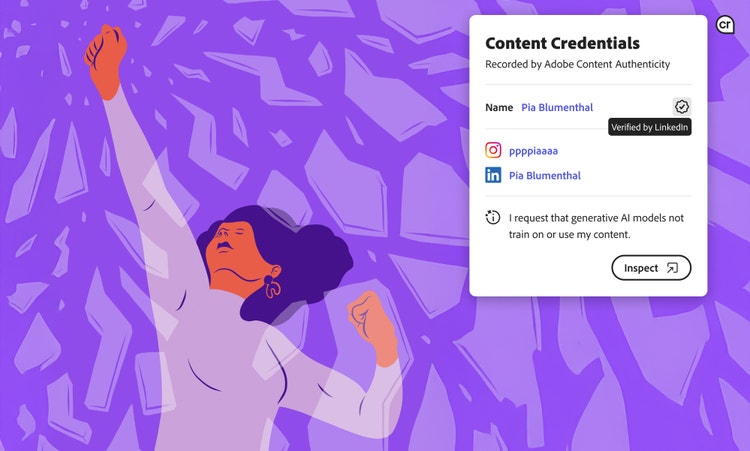

Illustration by Pia Blumenthal

Like a nutrition label for digital content, Content Credentials provide creation information about who made the content and when, and what type of edits happened along the way. Unlike other provenance solutions, they’re built on a trust model wherein they’re securely attached to content and validated by the tool used to attach them. They create a verifiable record of the creative process, bring information to the forefront, and help people understand the origins of digital content.

This system is collectively built by the Coalition for Content Provenance and Authenticity (C2PA), a Joint Development Foundation project that aims to provide publishers, creators, and consumers with flexible ways to understand the authenticity and provenance of media.

Pia Blumenthal leads product design for Adobe’s Content Authenticity Initiative team and co-chairs the C2PA UX task force. With the public beta of the Adobe Content Authenticity app, she talks about the work of helping creators maintain control over their content by adding verifiable attribution details about themselves and the preference to opt out of generative AI training using Content Credentials.

What was the primary goal when you set out to design the Content Authenticity app?

Pia Blumenthal: Ensuring that creators have the choice to decide how their work is used, or not used, in training datasets. Creativity takes time, effort, and dedication—honing a craft, learning through mistakes, and developing a unique artistic voice. Generative AI is a powerful and exciting tool, but its development has often relied on training datasets built from creative works without permission.

Of course, the issue isn’t always AI itself—it’s about ensuring creators have a say in how their work is used, while allowing their audiences to verify their content origins. Many creators have expressed concerns that their content is being incorporated into AI models without their knowledge or consent, with no clear way to opt out. If society values creativity, it should also respect creators' ability to decide whether their work is used in AI training.

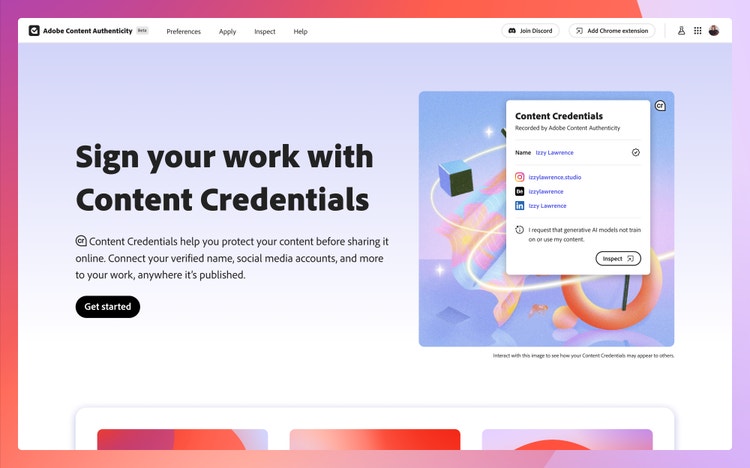

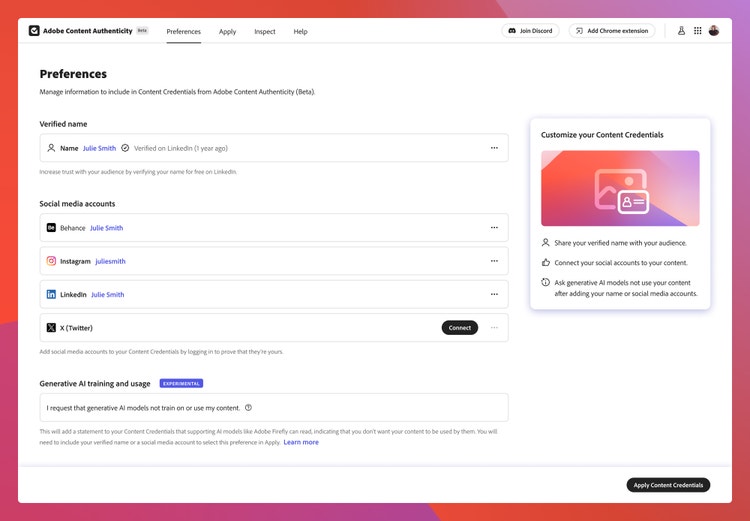

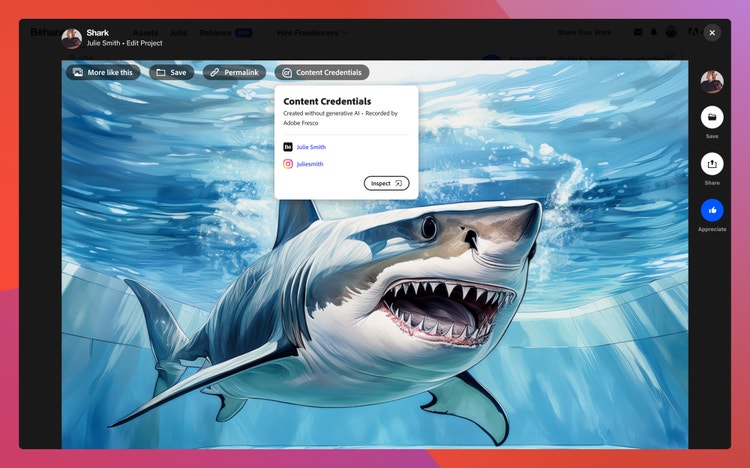

The Content Authenticity app is a way for creators to safeguard their work by adding verified identity and attribution details directly to their content and to request that generative AI models not train on or use their content using Content Credentials, which ties that request directly to the content itself. We added a live example of what this looks like on the landing page of the app. Hovering over the hero image reveals the “cr” icon, a truncation of Content Credentials, which signals the presence of provenance information. Rolling over the icon displays a popover with information about the creator and their work. Simple!

The secondary goal was to make it as easy as possible for people to apply Content Credentials to content they created in the past. The app allows creators to credential up to 50 files at a time, so they can more easily digitally sign their work before sharing it online.
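
For readers who script their own export pipelines, the 50-file limit can be pictured as simple batching. The sketch below is illustrative only: `MAX_FILES_PER_BATCH`, `batches`, and the file names are hypothetical and are not part of the app or any Adobe API.

```python
from typing import Iterator, List

# The article states the app credentials up to 50 files at a time.
# The constant and helper below are hypothetical names for illustration.
MAX_FILES_PER_BATCH = 50


def batches(paths: List[str], size: int = MAX_FILES_PER_BATCH) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `size` file paths."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(paths), size):
        yield paths[start:start + size]


# Example: a back catalog of 120 made-up file names.
files = [f"artwork_{i:03d}.png" for i in range(120)]
batch_sizes = [len(batch) for batch in batches(files)]
print(batch_sizes)  # [50, 50, 20]
```

A back catalog of 120 files would go through in three passes of 50, 50, and 20.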

What user insights did you leverage to help inform the design solution?

Pia: Over the years of designing the visual framework for Content Credentials, we’ve focused on learning what information creators want to include about themselves and their work, and what information to show to end viewers so that they can make more informed decisions about that content. For the app, we leveraged qualitative research studies focused on different types of creators, the types of attribution information they care about, and when and how they want to apply that attribution to their work.

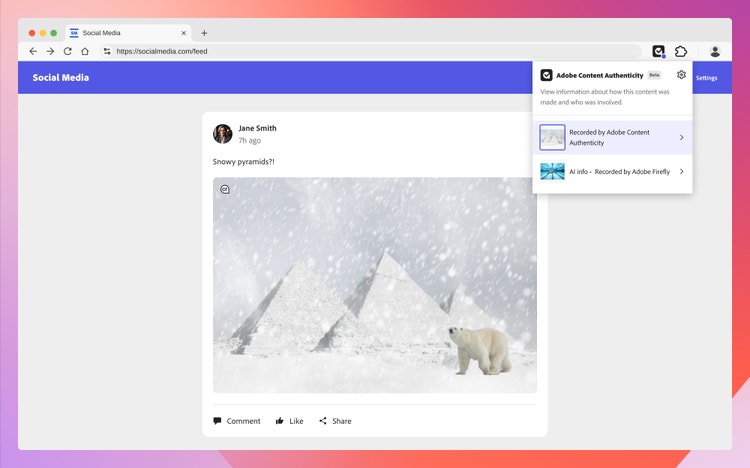

In a research study we conducted about the use of Content Credentials on social media, we found that one of the key trust signals for audiences is understanding who’s behind the content. They want to know who created it and they want to go to the creator’s account to learn more about who they are. Sharing content is a way to feel more connected with people, and posting online has become an important part of creative careers and promoting one’s work. To help people understand who’s behind content being shared online, we built several identity verification flows for creators to verify their names and social media accounts. Adding these types of identity signals helps creatives get the recognition they deserve.

We’ve also learned that creators have become more concerned about AI usage and the rights of artists. Because of the rapid adoption of generative AI technology, we’ve had to consider how to relay when something was created or edited with generative AI, and more recently, when artwork was specifically created without it. Creators have strong opinions about how their work is labeled, and of course, how generative AI models use it.

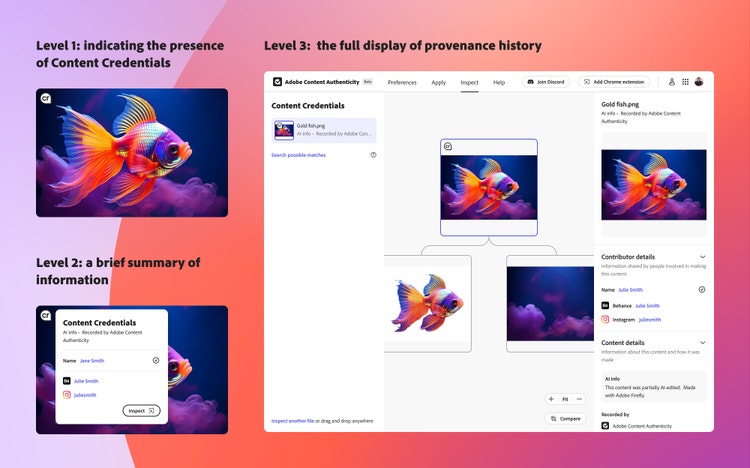

Hearing directly from creators and audiences informed how we built the app and how we display that information through a series of progressive disclosures. These disclosures include how we indicate the presence of Content Credentials, like the lightweight popovers that the Adobe Content Authenticity Chrome browser extension shows to signal them wherever they appear across the web, or the Inspect tool in the app that provides a complete overview of provenance.

What was the most unique aspect of the design process?

Pia: Designing the verified identity flow that allows creators to verify their legal name and include it as part of their Content Credentials.

This work was done in collaboration with LinkedIn, Behance, and Adobe’s identity services team. LinkedIn allows its users to add verifications about themselves, like their legal names or workplace emails. Adobe collaborated with LinkedIn to incorporate its new Verified on LinkedIn feature, allowing Adobe customers to also include those verifications in Adobe Content Authenticity and Behance.

We wanted to enable creators to sign their work with their names in a way that has accountability built in. Since not all creators want to add their names to their work, whether due to privacy concerns or because they use a different name professionally, they can connect their social media accounts through OAuth consents, which let them verify account ownership without revealing personal details. Both forms of identity are important for supporting the content creator’s verifiability and ensuring their work is correctly attributed.

Our team here at Adobe helped create a novel approach to verified identity metadata through a complementary open extension to Content Credentials. Traditionally, identity could be manually added or updated in unsecured metadata fields, but the new verified approach adds another layer of traceability to who someone says they are, making identity assertions more trustworthy.

The designs for verified identities visibly show the verified status and who did the verification. Working with Adobe Design’s Icons team, we created a verification badge with an interactive state that shows when a creator has gone through the verification process. We aligned user needs across teams to build a centralized flow that can be launched from multiple entry points. Generic in design, so it can support future verification services, the badge appears on Behance and in the Content Credentials UI on the Adobe Content Authenticity app.

What was the biggest design hurdle?

Pia: Designing against an ever-evolving open standard. It’s an interesting experience to design for both specific Adobe products and audiences and to consider best practices for implementers following the Content Credentials open standards. Our team had to strike a balance between the metadata Adobe tools can record about a piece of content and how they might appear—or not appear—in surfaces beyond our product ecosystem.

Even across Adobe products, we’re still collecting user feedback and refining the experience of applying them, since our tools, workflows, and the customers who use them can vary greatly. For example, although we built the app for creators to quickly and easily attach identity information to their content, it doesn’t include statements about how that content was made. To add that information, a creator would need to open Adobe Fresco or Adobe Photoshop and opt into Content Credentials at the start of their work to show how it was created, and whether it was created with the support of generative AI tools.

Our eventual goal is to make the Content Authenticity app the primary hub for managing the Content Credentials preferences, settings, and information creators might want to include across all supporting Adobe apps. The challenges of that work include making sure they’re easily discoverable across products, managing the different types of information products support, and ensuring that, as much as is possible, there’s a consistent UI so that Adobe-produced Content Credentials are recognizable wherever they appear.

How did the solution improve the experience?

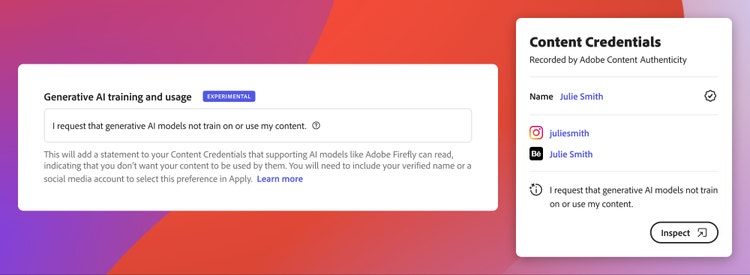

Pia: We hope to set the design standards for the application of verified identity and the generative AI training and usage preference, which means making the preference understandable and trustworthy while ensuring it has some meaningful impact... even in a complex digital ecosystem.

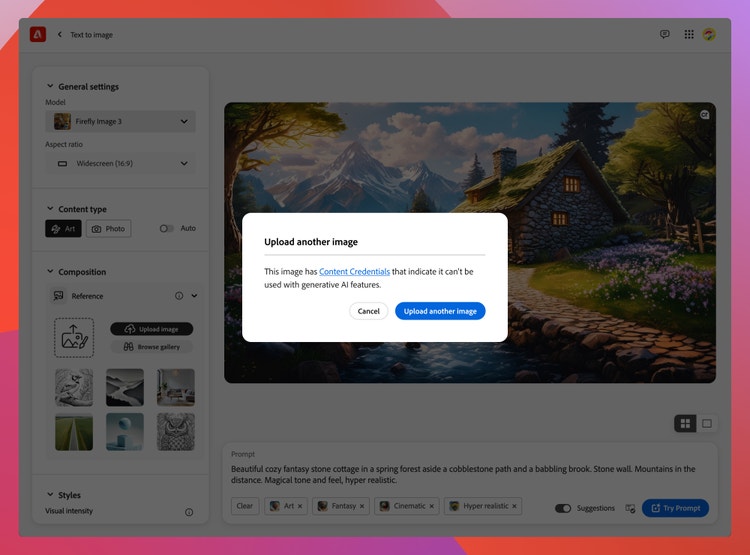

Creators need a straightforward way to set Content Credentials preferences, one that eliminates confusion and makes it clear what these preferences do and don’t do. Adobe has been advocating for a practical, scalable, global opt-out technology standard and working closely with policymakers and industry partners to establish clear mechanisms that give creators greater control over how generative AI uses their content. By integrating this feature into our app, we’re building the foundation for a more transparent, accountable, and creator-first future. By designing safeguards into the system, we strengthen its credibility and help ensure it’s used responsibly.

- Generative AI training and usage is labeled as an “experimental” preference. As we’re setting the foundation for greater creator control, we continue partnering with policymakers and industry partners to establish clear mechanisms for creator consent in generative AI training.

- The opt-out request is framed as an “I” statement, with the creator making a request to third-party generative AI tools not to use their work for training purposes.

- Identity verification ensures that only verified creators can set generative AI training and usage preferences, preventing bad actors from falsely claiming content. Integrating this preference ensures that the creator’s choice is embedded in their content in a verifiable way, so it is already in place when global opt-out regulations take shape and more companies start respecting creator preferences.
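
The three safeguards above can be pictured as a small data model. This is a minimal sketch under stated assumptions, not how the app or the Content Credentials standard implements the preference: `Creator`, `TrainingPreference`, `set_training_preference`, and the statement wording are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Creator:
    """Hypothetical creator record; field names are illustrative."""
    display_name: str
    identity_verified: bool


@dataclass(frozen=True)
class TrainingPreference:
    """Hypothetical preference record mirroring the safeguards described above."""
    statement: str             # framed as an "I" statement from the creator
    experimental: bool = True  # the preference is labeled "experimental"


def set_training_preference(creator: Creator) -> TrainingPreference:
    """Return an opt-out preference, but only for verified creators."""
    if not creator.identity_verified:
        raise PermissionError("Only verified creators can set this preference.")
    # Illustrative wording only; not the app's actual copy.
    return TrainingPreference(
        statement="I request that generative AI tools not train on or use my content."
    )


verified = Creator(display_name="Example Artist", identity_verified=True)
preference = set_training_preference(verified)
print(preference.experimental, preference.statement)
```

Gating the preference on verification in one place is a way to express the third safeguard: an unverified account cannot attach the request at all, so it cannot be used to falsely claim content.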

What did you learn from this design process?

Pia: Given the constant evolution and adoption of Content Credentials, I’m continually learning how to design for information and UI flexibility while trying to build on top of a foundational user experience. Introducing new assertions means we need to be quick to react and update our UX as needed based on how it’s adopted.

What’s next for the Content Authenticity app?

Pia: The app is just the beginning for centralizing identity and creation assertions for Content Credentials across Adobe. We’re already thinking about ways to integrate them more seamlessly into existing creator workflows, make management easier, and expand on the types of information creators may want to include about their content. On the viewer side, we’re continuously optimizing how they’re discovered and displayed, and through the collaborative work of the C2PA UX task force, we’re working on best practices to educate on and bring awareness to them.

The success of this effort doesn’t rest on a single company—it requires collective action. That’s why Adobe is continuing to work with policymakers, technology leaders, and creative communities worldwide to shape a creator-first ecosystem—particularly in the age of generative AI. However, meaningful change also requires action from the creative community. To ensure widespread adoption of this training and usage preference, creators must advocate for its implementation across platforms and seek greater control over their work in the digital space. Through a collective effort, we can build a future where AI respects and enhances creative expression.