What makes a learning experience good?

A design research framework for evaluating learning outcomes

Illustration by Shanti Sparrow

Software companies invest a lot of time and resources to help people develop their skills and teach them how to use their products. It’s a wide-reaching topic at Adobe, where teams are looking not only at how to help people learn to use our applications but also at how to help them grow their skills in creative domains like photography and illustration. To help people unlock their creative potential, our learning experiences range from in-app tooltips to hands-on tutorials and expert-led livestreams. Although there are many ways to teach creative skills, not all are equally effective, and one of our jobs as design researchers is to investigate learning behaviors and needs so we can decide how best to support people trying to grow their creative skills.

To better understand the types of learning experiences that would benefit people most, Victoria spearheaded internal interviews with several teams across Adobe to identify the big problems that needed to be tackled. At first, teams seemed to have different, disconnected issues (e.g., “This is inspirational, but do people actually learn from it?” “Is this learning intervention working?” “How do we design better tutorials?”). But when we looked closely, we realized there was a shared underlying question: What’s actually “good” when it comes to learning, and how can we make more effective decisions when producing that content? To help our teams make better decisions, we needed a standardized, rigorous method to assess the effectiveness of learning interventions.

We drew on internal workshops and synthesized existing research to create a framework of learning outcomes, then piloted and refined that instrument in qualitative research. The result is the Learning Outcomes Assessment Framework (LOAF). It’s proven so useful in the short time we’ve used it at Adobe that we believe it could also help other researchers and designers tackling the complexities of creating “good” learning interventions.

What is the LOAF?

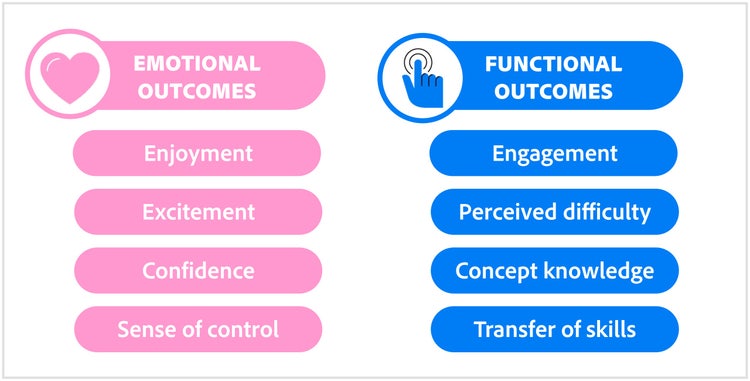

The LOAF (Vega Villar & Hollis, 2022) provides a framework and tools to objectively evaluate the success of learning experiences across Adobe’s product ecosystem. It’s based on the idea that for a learning experience to be good, it must have a positive impact on both functional and emotional outcomes. The LOAF includes assessment criteria for those two dimensions:

- Functional outcomes: Our goal is to deliver learning experiences that appropriately engage and challenge users to help them understand and use our tools. The LOAF assesses four functional outcomes:

  - Engagement: In this context, “engagement” isn’t about clicks; it’s about thinking. For tutorials to teach effectively, they must engage people without overwhelming them.

  - Difficulty: According to learning theory, learning occurs when a task is too hard to complete alone but achievable with help (Vygotsky, 1987; Schwartz, Tsang and Blair, 2016). Learning experiences should hit that optimal level of challenge.

  - Knowledge: Becoming acquainted with and understanding new words and concepts is central to learning how to use software.

  - Transfer: The goal is to give users the skills they need to achieve their own creative goals. In the LOAF, the “gold standard” of learning is that users can transfer what they learned to solve a new challenge (Bloom, 1956; Hansen, 2008).

- Emotional outcomes: If a learning experience is boring or discouraging, it doesn’t matter how much functional value it has. An effective learning intervention must feel good and foster motivation. The LOAF assesses four emotional outcomes:

  - Enjoyment: Having fun during a learning intervention fuels intrinsic motivation to learn and is an immediate signal of success (Melcer, Hollis & Isbister, 2017).

  - Excitement: If, after following a tutorial, a person is pumped to continue learning, we know we’re on the right path.

  - Autonomy: Helping people feel in control of the experience empowers and motivates them to continue learning.

  - Self-confidence: When people have low confidence in their abilities, they’re more likely to give up on their goals (Silvia and Duval, 2001). Tutorials should instill confidence and leave people feeling equipped to meet new challenges.

How LOAF research is structured

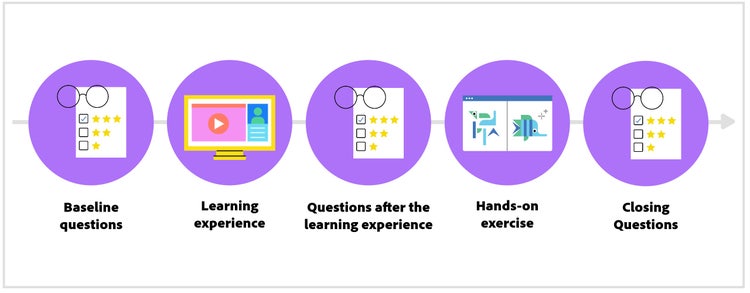

To assess the functional and emotional outcomes of a learning experience, a typical LOAF study uses a combination of rating scales, open-ended questions, and a transfer exercise (where we watch people as they try to apply their new skills to complete a new task). We use pre- and post-learning experience questions, a hands-on exercise, and closing questions to get at different dimensions of functional and emotional outcomes:

Questions to ask before and after the learning experience

Ideally, we want our learning experiences to demystify our products and nurture people’s motivation to use them. To capture possible changes in attitudes toward an application, we ask participants to indicate how strongly they agree with certain statements both before and after the learning experience, so we can compare their emotional states before vs. after:

- Enjoyment: If they’ve used the app before, what is their view on how enjoyable it is to use? (“Using Adobe Illustrator is an enjoyable experience.”)

- Excitement to learn: What is their baseline appetite for learning? (“I’m excited to learn how to use different tools in Adobe Illustrator.”)

- Autonomy: What are their existing views on having a sense of control with the app? (“I’m comfortable playing around with Illustrator.”)

- Self-confidence: How capable do they feel using the app? (“I personally find it easy to get things done in Illustrator.”)
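Because the same statements are asked before and after the experience, one simple way to read them is as a change score per outcome. The sketch below is a minimal illustration rather than part of the LOAF itself: it assumes a 1-7 agreement scale, and the outcome keys, function names, and participant ratings are all hypothetical.

```python
from statistics import mean

# Assumption: ratings use a 1-7 agreement scale (1 = strongly disagree,
# 7 = strongly agree); the LOAF description does not fix the scale.
OUTCOMES = ("enjoyment", "excitement", "autonomy", "self_confidence")

Ratings = dict[str, int]


def change_scores(pre: Ratings, post: Ratings) -> dict[str, int]:
    """Post-minus-pre difference per outcome; positive means agreement went up."""
    return {o: post[o] - pre[o] for o in OUTCOMES}


def mean_change(pairs: list[tuple[Ratings, Ratings]]) -> dict[str, float]:
    """Average each outcome's change score across all participants."""
    per_person = [change_scores(pre, post) for pre, post in pairs]
    return {o: mean(p[o] for p in per_person) for o in OUTCOMES}


# Hypothetical (pre, post) ratings for two participants.
participants = [
    ({"enjoyment": 4, "excitement": 5, "autonomy": 3, "self_confidence": 3},
     {"enjoyment": 5, "excitement": 6, "autonomy": 5, "self_confidence": 4}),
    ({"enjoyment": 6, "excitement": 4, "autonomy": 4, "self_confidence": 2},
     {"enjoyment": 6, "excitement": 5, "autonomy": 4, "self_confidence": 4}),
]
print(mean_change(participants))
```

Keeping per-participant change scores (rather than only comparing group averages) makes it possible to look at how different user segments or skill levels shifted.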

Questions to ask after the learning experience

After the learning experience, we ask participants a few more questions. The items in this questionnaire were adapted from several peer-reviewed scales (Ryan and Deci, 2000; O’Brien and Toms, 2013; Tisza and Markopoulos, 2021; Webster and Ahuja, 2006) and are designed to capture essential dimensions of the different learning outcomes. The questions can vary depending on the focus of the study, but they should cover each concept as thoroughly as possible.

- Enjoyment: Was their experience a positive one? (“I felt frustrated during the tutorial.”)

- Excitement to learn: Did it increase their appetite for learning? (“I want to try another tutorial to learn how to use Adobe After Effects.”)

- Autonomy: Did they feel in control of the experience? (“During the tutorial, I understood what to do at all times.”)

- Self-confidence: Did the tutorial make them feel more capable? (“I feel confident in my ability to recolor an image competently using Adobe Photoshop.”)

- Engagement: Did the tutorial make them think? (“The tutorial spurred my imagination.”)
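Some of these items are negatively worded: agreeing with “I felt frustrated during the tutorial” signals lower enjoyment, not higher. A common survey practice is to reverse-score such items before averaging them with positively worded ones. The sketch below assumes a 1-7 scale and a hypothetical second, positively worded enjoyment item; the LOAF description above does not specify how items are combined.

```python
from statistics import mean

# Assumption: a 1-7 agreement scale; the LOAF description does not fix the range.
SCALE_MIN, SCALE_MAX = 1, 7


def reverse_score(rating: int) -> int:
    """Flip a negatively worded item so a higher value always means a better outcome."""
    if not SCALE_MIN <= rating <= SCALE_MAX:
        raise ValueError(f"rating {rating} is outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - rating


def composite(items: list[tuple[int, bool]]) -> float:
    """Average one outcome's items; each entry is (rating, is_negatively_worded)."""
    return mean(reverse_score(r) if negative else r for r, negative in items)


# Hypothetical enjoyment responses: "I felt frustrated during the tutorial."
# rated 2 (negatively worded), plus a hypothetical positively worded item rated 6.
enjoyment_items = [(2, True), (6, False)]
print(composite(enjoyment_items))
```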

Aside from assessing learning outcomes, we also assess other dimensions, like a tutorial’s difficulty or usability, which can profoundly affect the overall experience. To assess difficulty and usability, we combine participants’ responses with observations of key behaviors such as completion time, number of errors, and repeated attempts.

The hands-on exercise

A common way of assessing learning success involves asking quiz-like questions (e.g., “The tutorial prompted you to ‘group’ shapes. Please describe what ‘grouping’ means and why it might be important”). In early applications of the LOAF, we found that these questions weren’t great predictors of participants’ ability to apply their new skills in a hands-on exercise. That is, participants might be able to define grouping properly but fail to group objects, or they might successfully group objects while struggling to put into words what they were doing or why.

An alternative way to assess how well participants understood the gist of a tutorial (and to identify which parts resonated most or least) is to ask an open-ended question like, “Describe, in your own words, what you learned in this tutorial.” But the ultimate test of success is asking participants to solve a new problem by applying the skills taught in the learning experience.

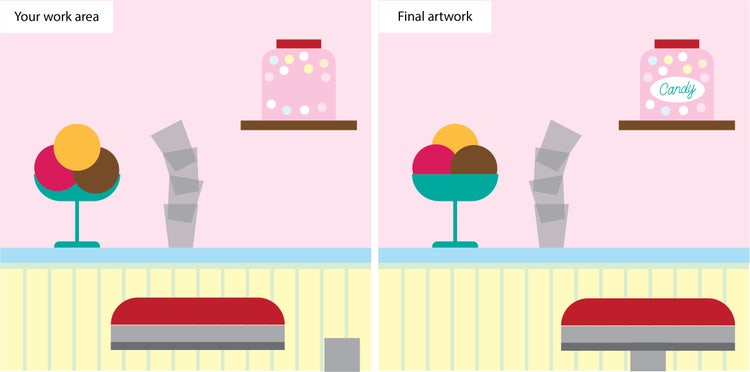

If, for instance, the tutorial taught participants to arrange and group shapes in Illustrator, the hands-on exercise will ask them to replicate a new target image by arranging and grouping shapes in a file. If the tutorial showed participants how to remove an element from an image in Photoshop, the hands-on exercise might ask them to remove a person from the background in a new photo.

As we do when assessing learning outcomes, we evaluate participants’ performance on the hands-on exercise using a combination of observed behaviors (like completion time, repeated attempts, the percentage of tutorial tools/actions used, or off-task actions) and self-reported measures (e.g., “I think the tutorial prepared me well for this exercise”).
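The observed behaviors above can be summarized from a log of participant actions. The sketch below is a minimal illustration under assumptions that are ours, not the LOAF’s: the `Action` log format is hypothetical, completion time is taken as the timestamp of the last logged action, each undone action stands in for a repeated attempt, and any tool the tutorial did not teach counts as off-task. A real study would define these measures to fit its own exercise.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """One logged action from the hands-on exercise (hypothetical log format)."""
    timestamp_s: float    # seconds since the exercise started
    tool: str             # tool or action name, e.g. "group"
    undone: bool = False  # True if the participant later undid this action


def exercise_metrics(actions: list[Action], tutorial_tools: set[str]) -> dict[str, float]:
    """Summarize observed behaviors for one participant's transfer exercise."""
    if not tutorial_tools:
        raise ValueError("tutorial_tools must not be empty")
    used = {a.tool for a in actions}
    return {
        # Share of the tools/actions taught in the tutorial that were used at all.
        "pct_tutorial_tools": 100 * len(used & tutorial_tools) / len(tutorial_tools),
        # Rough proxy: actions with tools the tutorial did not teach.
        "off_task_actions": sum(a.tool not in tutorial_tools for a in actions),
        # Rough proxy: each undone action counts as one repeated attempt.
        "repeated_attempts": sum(a.undone for a in actions),
        # Proxy: time of the last logged action (0.0 if nothing was logged).
        "completion_time_s": max((a.timestamp_s for a in actions), default=0.0),
    }


# Hypothetical log for an "arrange and group shapes" exercise in Illustrator.
log = [
    Action(5.0, "arrange"),
    Action(12.5, "group", undone=True),
    Action(20.0, "group"),
    Action(31.0, "pen"),
]
print(exercise_metrics(log, {"arrange", "group"}))
```

These behavioral numbers would sit alongside the self-reported items rather than replace them, since participants can succeed at the task while still feeling unprepared, or vice versa.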

How we’ve been using the LOAF at Adobe

The LOAF is for anyone interested in measuring learning experiences. So far, we’ve used it to assess various tutorial formats (like video or step-by-step hands-on instruction) in small moderated studies as well as larger unmoderated ones. We’ve tested live tutorials that are widely available inside our applications, but also tutorial prototypes. Being able to study the effectiveness of tutorials at the prototype stage has proven extremely useful because it allows stakeholders to choose among various designs early in the process and move forward with more confidence.

Mercedes has partnered with different teams across Adobe to help them use the LOAF independently. Throughout these partnerships, we’ve continued to refine our method, streamline its application, and adjust it for different use cases, and, of course, we’ve gathered information that’s helping us create better learning experiences.

When’s the best time to use this assessment approach? Designers and researchers we’ve shared it with internally have been particularly interested in it, and the LOAF’s adjustable framework makes it suitable for evaluating the quality and impact of many learning experiences in an objective and systematic manner, such as:

- Comparing two tutorial formats for the same instructional content to provide design direction

- Investigating the impact of a learning experience across user segments and skill levels

- Measuring the effect of adding certain elements to a tutorial (like hints to help users find tools)

- Benchmarking the current state of our learning experiences and monitoring changes over time

- Analyzing the quality of learning experiences created outside the company (e.g., on YouTube)

The LOAF is relatively new, but it’s a good start toward a systematic evaluation of our learning experiences so we can understand what works and why. It’s one more tool to help us design better experiences for people: the utility of a learning experience is important, but how learning makes people feel is also critical.

This project would not have been possible without our wonderful collaborators: Amanda Dowd, Katie Wilson, Jenna Melnyk, Melissa Guitierrez, Jessie Smith, Tyler Somers, Alex Hunt, Shanti Sparrow, James Slaton, Sumanth Shiva Prakash, Jan Kabili, Brian Wood, Erin Wittkop-Coffey, Ron Lopez Ramirez, and Sara Kang.